Designing a fault-tolerant process in a loosely coupled system built on asynchronous calls can be quite challenging, and trade-offs usually have to be made between resilience and performance. The most common problem in such a system is missed or unprocessed calls that cause data drift between components. Left unchecked, this drift accumulates over time and can eventually render the system unusable.

Use Case:

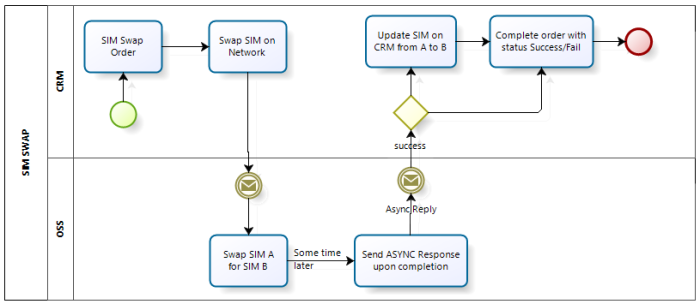

A GSM customer swaps his SIM card:

- A SIM migration order is created.

- Order processing starts, and the SIM swap call is sent to the network elements.

- The customer’s SIM is swapped, but the response from the network elements is lost or never sent.

- Customer care cancels the CRM order.

- The customer now has two different SIMs associated with his account: the one he is actually using, recorded in the network, and his old SIM card, still recorded in CRM.

- All subsequent orders fail, since the customer’s service account is inconsistent across the BSS stack.

One way to prevent this issue altogether is to lock the customer for editing until the network confirms that the SIM swap request has completed. If a failure happens during the SIM swap, the customer stays locked until the issue is resolved manually. This approach is called Fault Avoidance; it is costly in terms of performance and provides a really poor customer experience.
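A minimal Python sketch of the fault-avoidance approach helps make its cost concrete. `CustomerRegistry`, `CustomerLockedError` and the in-memory dictionaries are hypothetical stand-ins for the CRM, chosen only for illustration.

```python
from dataclasses import dataclass, field


class CustomerLockedError(Exception):
    """Raised when a change is attempted while a SIM swap is still pending."""


@dataclass
class CustomerRegistry:
    # Hypothetical in-memory CRM: customer id -> SIM recorded for that customer.
    sims: dict[str, str] = field(default_factory=dict)
    # Hypothetical order list per customer.
    orders: dict[str, list[str]] = field(default_factory=dict)
    # Customers locked because a SIM swap is in flight.
    locked: set[str] = field(default_factory=set)

    def start_sim_swap(self, customer_id: str) -> None:
        # Lock the customer before the SIM swap call is sent to the network.
        if customer_id in self.locked:
            raise CustomerLockedError(customer_id)
        self.locked.add(customer_id)

    def complete_sim_swap(self, customer_id: str, new_sim: str) -> None:
        # Only a confirmed network response updates CRM and releases the lock.
        self.sims[customer_id] = new_sim
        self.locked.discard(customer_id)

    def place_order(self, customer_id: str, order: str) -> None:
        # Every other change is rejected while the swap is pending.
        if customer_id in self.locked:
            raise CustomerLockedError(customer_id)
        self.orders.setdefault(customer_id, []).append(order)

    def manual_release(self, customer_id: str) -> None:
        # If the network response never arrives, only an operator can unlock.
        self.locked.discard(customer_id)
```

If the network response is lost, `complete_sim_swap` is never called, so every later `place_order` for that customer raises `CustomerLockedError` until an operator calls `manual_release`. That blocked customer is exactly the poor experience described above.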

Fault Tolerance, on the other hand, accepts that such faults will occur but prevents them from turning into failures. In my opinion, the best pattern for handling faults in loosely coupled systems is checkpointing.

Checkpointing is a technique in which the system periodically checks for faults or inconsistencies and attempts to recover from them, thus preventing a failure from happening.

The checkpointing pattern is based on a four-stage approach:

- Error detection

- Damage assessment and confinement (sometimes called “firewalling”)

- Error recovery

- Fault treatment and continued service
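The four stages can be sketched against the SIM swap use case as a single pass of a periodic checkpoint. This is a minimal illustration under stated assumptions: `BssView`, `checkpoint` and the two dictionaries are hypothetical, and the sketch assumes the network is the source of truth because it reflects the SIM the customer is actually using.

```python
from dataclasses import dataclass, field


@dataclass
class BssView:
    # Hypothetical snapshots of the same customer data held by two systems.
    crm_sims: dict[str, str]      # customer id -> SIM recorded in CRM
    network_sims: dict[str, str]  # customer id -> SIM active in the network
    blocked: set[str] = field(default_factory=set)


def checkpoint(view: BssView) -> list[str]:
    """Run one checkpoint pass and return the customers it repaired."""
    # 1. Error detection: find customers whose CRM and network records disagree.
    drifted = [
        cid for cid, sim in view.crm_sims.items()
        if view.network_sims.get(cid) != sim
    ]
    # 2. Damage assessment and confinement: block new orders for the affected
    #    customers so the inconsistency cannot spread to later orders.
    view.blocked.update(drifted)

    repaired = []
    for cid in drifted:
        network_sim = view.network_sims.get(cid)
        if network_sim is None:
            # No network record to trust: leave the customer blocked for manual handling.
            continue
        # 3. Error recovery: align CRM with the network (assumed source of truth).
        view.crm_sims[cid] = network_sim
        # 4. Fault treatment and continued service: lift the block.
        view.blocked.discard(cid)
        repaired.append(cid)
    return repaired
```

In practice such a function would be run on a schedule, for example from a timer or a batch job. A customer whose network record cannot be found is left blocked for manual handling rather than guessed at.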

If this approach sounds familiar, it's because it has been used for quite some time in SQL databases, where the client and the DB server form a loosely coupled system. To keep the database consistent when a fault occurs during a long-running transaction, the following steps are taken:

- The termination of the client session is detected (step 1, detection).

- The server checks whether the user has any uncommitted DML statements (step 2, assessment).

- The undo log is read to retrieve the data needed to roll back the changes (step 3, recovery).

- The changes are rolled back and data consistency is restored (step 4, fault treatment).
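The same four steps can be sketched with a toy in-memory table and undo log. This is a simplification for illustration only, not how any particular DBMS stores its undo data; `Session`, `write`, `commit` and `on_session_terminated` are hypothetical names.

```python
from dataclasses import dataclass, field

_MISSING = object()  # marks a key that did not exist before a write


@dataclass
class Session:
    table: dict  # shared "database" table: key -> value
    undo_log: list = field(default_factory=list)  # (key, previous value) per write

    def write(self, key, value):
        # Record the before-image first so the change can be undone later.
        self.undo_log.append((key, self.table.get(key, _MISSING)))
        self.table[key] = value

    def commit(self):
        # Committed changes are permanent; their undo entries are no longer needed.
        self.undo_log.clear()


def on_session_terminated(session: Session) -> int:
    """Recover after a client session dies; return the number of writes undone."""
    # Step 1 (detection): this function is called once the session is known to be gone.
    # Step 2 (assessment): any remaining undo entries mean uncommitted DML.
    undone = len(session.undo_log)
    # Steps 3 and 4 (recovery, treatment): apply before-images in reverse order.
    for key, previous in reversed(session.undo_log):
        if previous is _MISSING:
            del session.table[key]
        else:
            session.table[key] = previous
    session.undo_log.clear()
    return undone
```

Replaying the before-images in reverse order matters when the same key was written more than once in the uncommitted transaction: the oldest before-image is applied last, so the key ends up with its last committed value.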

Checkpoint Roll-Back:

Checkpoint rollback, the pattern used by DBMSs, relies on taking a snapshot of the system at certain checkpoints in the process flow and, upon a failure between two checkpoints, restoring the last snapshot. However, this pattern becomes too complex to implement in multi-tiered systems, since a consistent snapshot would have to be taken and restored across every tier involved in the flow.
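A minimal checkpoint-rollback sketch, assuming the whole process state fits in one Python dictionary. `CheckpointedProcess` and `run_step` are hypothetical; `copy.deepcopy` is used so that later steps cannot mutate the saved snapshot.

```python
import copy


class CheckpointedProcess:
    """Checkpoint-rollback sketch: snapshot state at checkpoints, restore on failure."""

    def __init__(self, state: dict):
        self.state = state
        self._snapshot = copy.deepcopy(state)

    def checkpoint(self) -> None:
        # Snapshot the whole state at a known-good point in the flow.
        self._snapshot = copy.deepcopy(self.state)

    def run_step(self, step) -> None:
        # Run one step between checkpoints; on any failure, restore the last snapshot
        # in place (so other holders of self.state see it) and re-raise the error.
        try:
            step(self.state)
        except Exception:
            self.state.clear()
            self.state.update(copy.deepcopy(self._snapshot))
            raise
        self.checkpoint()
```

The sketch works only because all state sits in one object. In a multi-tiered system the equivalent of `self.state` is spread across CRM, the rest of the BSS stack and the network elements, which is what makes this pattern hard to apply there.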

Checkpoint Recovery Block:

This pattern relies on alternative flows chosen by the type of fault: the checkpoint recognizes the type of fault and picks the appropriate block to recover from the error and complete the process.

This approach is used extensively in code, as a try block with multiple catch blocks, each handling a different type of exception. Here, however, instead of being used within the code of a single layer, it is taken one step further and applied at the process level.
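A process-level recovery block for the SIM swap step might look like the sketch below. The exception types `NetworkTimeout` and `NetworkRejected`, the callables `send_to_network` and `query_network`, and the chosen recovery flows are all hypothetical, picked to show how each fault type selects a different recovery block.

```python
class NetworkTimeout(Exception):
    """Hypothetical: the network element never answered the SIM swap call."""


class NetworkRejected(Exception):
    """Hypothetical: the network element explicitly refused the SIM swap."""


def swap_sim(order: dict, send_to_network, query_network) -> str:
    """Process-level recovery block: pick a recovery flow based on the fault type."""
    try:
        send_to_network(order)
        return "completed"
    except NetworkTimeout:
        # The swap may or may not have happened: ask the network for the real state
        # instead of cancelling the order blindly.
        if query_network(order["customer_id"]) == order["new_sim"]:
            return "completed"
        # Assumed safe: the swap was not applied, so retry once.
        # A second failure propagates to the caller.
        send_to_network(order)
        return "completed after retry"
    except NetworkRejected:
        # Nothing changed in the network, so cancelling keeps CRM and network aligned.
        return "cancelled"
```

The timeout branch is what prevents the failure in the use case: instead of the order being cancelled when the response goes missing, the process checks what the network actually did before deciding.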
