In databases, ACID (Atomicity, Consistency, Isolation, Durability) is a set of properties that guarantee reliability while transactions are processed. Databases are the foundation of most, if not all, IT solutions, so they can be considered the superclass of any transactional system, and such systems usually inherit these properties. One way to abstract a complex system is to view it as a database in which each subsystem is a table.

Keeping these concepts in mind while designing transaction-based systems is valuable, as it yields a stable, operable system that runs as expected at a low operational cost. Naturally, a cost-benefit analysis should come first, because sometimes it is actually cheaper to let the system fail and handle the failures operationally. Within the context of transactional system design, these properties are:

Atomicity: All or nothing. A transaction should either complete successfully or be rolled back so that the impact it had on the system is reversed; a partially successful transaction should not exist. This can be implemented with exception handling and checkpointing.

The complexity of atomicity is a function of the number of systems involved and the actions required to roll back a transaction’s partial impact. Recursive failures must also be kept in mind, as there is always a chance that the rollback itself fails. Such functionality is usually achieved with an order management system (such as OSM), which oversees the execution of a transaction across the subsystems and makes sure that failures are handled or rolled back.
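The rollback behaviour described above can be sketched in a few lines. This is only an illustration under stated assumptions, not how OSM or any other order management product works: the `Step` tuple, `run_atomically`, `RollbackFailed`, and the three subsystem names are all hypothetical. Each step pairs an action with a compensating action, and a failed compensation is surfaced separately so that it can be handed to operations.

```python
from typing import Callable, List, Tuple

# (subsystem name, action, compensating action); all names are hypothetical
Step = Tuple[str, Callable[[], None], Callable[[], None]]


class RollbackFailed(Exception):
    """Raised when a compensation fails and manual intervention is needed."""

    def __init__(self, subsystems: List[str]):
        super().__init__(f"rollback failed for: {', '.join(subsystems)}")
        self.subsystems = subsystems


def run_atomically(steps: List[Step]) -> bool:
    """Run every step, or undo the completed ones if any step fails.

    Returns True when all steps succeed and False when the transaction
    was rolled back cleanly. Raises RollbackFailed if a compensation
    itself fails (the "rollback of the rollback" case).
    """
    completed: List[Step] = []
    try:
        for step in steps:
            step[1]()                # perform the action on the subsystem
            completed.append(step)
    except Exception as cause:
        stuck: List[str] = []
        # Undo in reverse order, and keep going even if one undo fails.
        for name, _, compensate in reversed(completed):
            try:
                compensate()
            except Exception:
                stuck.append(name)
        if stuck:
            raise RollbackFailed(stuck) from cause
        return False
    return True


# Toy SIM swap across three in-memory "subsystems"; the last step fails.
state = {"crm": "old-sim", "billing": "old-sim"}


def fail_network() -> None:
    raise RuntimeError("network element unreachable")


steps: List[Step] = [
    ("crm", lambda: state.update(crm="new-sim"), lambda: state.update(crm="old-sim")),
    ("billing", lambda: state.update(billing="new-sim"), lambda: state.update(billing="old-sim")),
    ("network", fail_network, lambda: None),
]
ok = run_atomically(steps)
```

In this toy run the network step fails, so the CRM and billing changes are compensated and `state` ends up unchanged. In a real landscape each action and compensation would be a service call, and the number of such pairs is what drives the complexity mentioned above.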

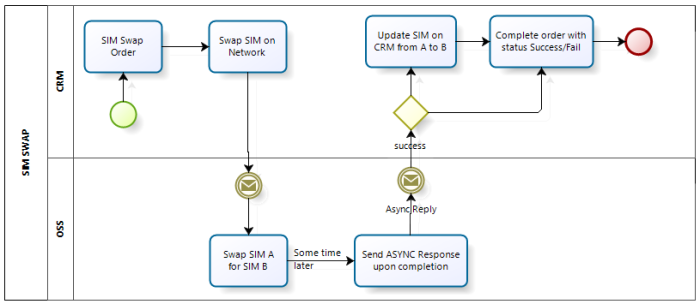

Consistency: Any transaction must bring the entire system from one valid state to another. For instance, in a SIM swap the updated SIM must be reflected across all systems. Inconsistencies can arise in many ways other than failures, even when atomicity is well guarded.

One of the most common causes of data drift is human operational intervention: operations staff updating a system manually with a database update statement or by invoking an internal system service. Another cause is bad design: instead of relying on a system’s “public” services, callers directly invoke a subsystem’s “private” micro-services.

Consistency can be maintained by restricting database access and by setting standards for micro-service invocation that require callers to rely on public services invoked through the middleware. Constructing protected or private micro-services is a pattern that should be used more often, as it guarantees that future developers will not directly invoke a micro-service in a way that would impact the system’s consistency.
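As a rough illustration of the public versus private split, the sketch below exposes one public operation that updates every subsystem and keeps the per-subsystem updates private. The class `SubscriberFacade`, its methods, and the sample identifiers are hypothetical. Python’s leading underscore is a naming convention only, so in a real deployment the restriction would have to be enforced by the middleware or by network and database access controls.

```python
from typing import Dict, Optional


class SubscriberFacade:
    """Single public entry point for SIM changes; all names are hypothetical."""

    def __init__(self) -> None:
        # Each dict stands in for one subsystem's view of MSISDN -> SIM.
        self._crm: Dict[str, str] = {}
        self._billing: Dict[str, str] = {}
        self._network: Dict[str, str] = {}

    def swap_sim(self, msisdn: str, new_sim: str) -> None:
        """Public service: the only supported way to change a SIM."""
        for update in (self._update_crm, self._update_billing, self._update_network):
            update(msisdn, new_sim)

    def is_consistent(self, msisdn: str) -> bool:
        """True when every subsystem holds the same SIM for this subscriber."""
        views = {
            self._crm.get(msisdn),
            self._billing.get(msisdn),
            self._network.get(msisdn),
        }
        return len(views) == 1

    # "Private" per-subsystem services: not meant to be called directly.
    def _update_crm(self, msisdn: str, sim: Optional[str]) -> None:
        self._crm[msisdn] = sim

    def _update_billing(self, msisdn: str, sim: Optional[str]) -> None:
        self._billing[msisdn] = sim

    def _update_network(self, msisdn: str, sim: Optional[str]) -> None:
        self._network[msisdn] = sim


facade = SubscriberFacade()
facade.swap_sim("0790000000", "SIM-2")
consistent_after_public_call = facade.is_consistent("0790000000")

# Bypassing the public service updates one subsystem only and causes drift.
facade._update_billing("0790000000", "SIM-3")
consistent_after_private_call = facade.is_consistent("0790000000")
```

The second call mimics a manual fix or a direct private invocation: only billing changes, so the three views no longer agree.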

Isolation: The concurrent execution of transactions should produce the same results as executing them serially. A customer updating their bundle and changing their voice mail language should get the same result whether the two changes are executed in parallel or serially. Siebel usually copies the customer assets into any newly constructed order and doesn’t update the assets until the order is complete. If both orders run in parallel, one will end up rolling back the other (only on Siebel assets), hence you often find that Siebel will only allow a single open order at a time. And if a customer has a failed order that requires operational intervention, they can’t do anything until that order has been completed successfully.

Maintaining isolation in a complex environment can be rather complicated. The simplistic solution, which is the de facto best practice, is to lock out parallel process execution altogether by piping everything through a single system (order management/CRM) and making sure that transactions are executed serially. More advanced approaches are available, such as intelligent business rules about which actions can be conducted in parallel; however, the cost of such approaches is high and operating them is a nightmare.
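The “single open order at a time” rule can be sketched as a small gate in front of order creation. This is a minimal in-process illustration, not Siebel’s actual mechanism; `SingleOpenOrderGate` and its methods are hypothetical names, and a real system would keep this state in a shared store rather than in memory.

```python
import threading
from typing import Set


class SingleOpenOrderGate:
    """Allows at most one open order per customer (hypothetical sketch)."""

    def __init__(self) -> None:
        self._guard = threading.Lock()      # protects the set below
        self._customers_with_open_order: Set[str] = set()

    def try_open_order(self, customer_id: str) -> bool:
        """Return True if an order may start, False if one is already open."""
        with self._guard:
            if customer_id in self._customers_with_open_order:
                return False
            self._customers_with_open_order.add(customer_id)
            return True

    def close_order(self, customer_id: str) -> None:
        """Mark the customer's order as completed so a new one may start."""
        with self._guard:
            self._customers_with_open_order.discard(customer_id)


gate = SingleOpenOrderGate()
first = gate.try_open_order("cust-1")    # bundle change starts
second = gate.try_open_order("cust-1")   # voice mail change must wait
gate.close_order("cust-1")               # bundle change completes
third = gate.try_open_order("cust-1")    # voice mail change can now start
```

The gate also shows the downside mentioned earlier: if the first order fails and is never closed, the customer stays blocked until someone resolves it.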

Durability: Once a transaction has completed successfully, it remains so in the event of a power outage or a crash. This impacts in-flight orders with multiple subflows: in case of an outage, the order should resume from where it stopped. Oracle AIA (Oracle’s pre-Fusion middleware) achieved this through the concept of “dehydration points”, in which a snapshot of the flow is stored in the database as a checkpoint. Oracle AQs (Advanced Queues) guarantee that messages sent between subsystems are kept in non-volatile memory and are handled once the outage ends.

Designing for durability at the high-level design stage can be challenging, given that the design should be technology-agnostic. Still, there is a set of best practices, such as checkpointing and avoiding exceptionally long flows.
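Checkpointing can be illustrated without tying it to any product. The sketch below is an assumption-laden toy, not Oracle’s dehydration mechanism: `save_checkpoint`, `load_checkpoint`, `run_flow`, and the `crash_before` parameter (used only to simulate an outage) are hypothetical. After each step the index of the next step is written to disk, so a restarted flow resumes where it stopped.

```python
import json
import os
import tempfile
from typing import Callable, Dict, List, Optional


def save_checkpoint(path: str, state: Dict[str, int]) -> None:
    """Write the checkpoint to a temp file, then atomically swap it in."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "w") as handle:
        json.dump(state, handle)
        handle.flush()
        os.fsync(handle.fileno())    # push the bytes to stable storage
    os.replace(tmp_path, path)       # readers never see a half-written file


def load_checkpoint(path: str) -> Dict[str, int]:
    """Return the stored checkpoint, or a fresh one if none exists yet."""
    try:
        with open(path) as handle:
            return json.load(handle)
    except FileNotFoundError:
        return {"next_step": 0}


def run_flow(
    path: str,
    steps: List[Callable[[], None]],
    crash_before: Optional[int] = None,
) -> None:
    """Run the remaining steps, checkpointing after each one."""
    state = load_checkpoint(path)
    for index in range(state["next_step"], len(steps)):
        if index == crash_before:
            raise RuntimeError("simulated outage")
        steps[index]()
        save_checkpoint(path, {"next_step": index + 1})
```

A crash between a step finishing and its checkpoint being written would cause that step to run again on restart, so the steps should be idempotent. That is also one reason to keep flows short: fewer steps between checkpoints means less work to repeat or reconcile.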